-

Remember the first time you heard your company was going AI-first? Maybe it came through an all-hands that felt different from the others. The CEO said, “By Q3, every team should have integrated AI into their core workflows,” and the energy in the room (or on the Zoom) shifted. You saw a mix of excitement…

-

We’ve all seen the headlines: a third of US college students say they use ChatGPT for writing tasks at least once a month. The share of US teens turning to the same tool for schoolwork doubled between 2023 and 2024. Generative AI tools overall are a fixture of life for seven out of ten teens.…

-

AI spending in Asia Pacific continues to rise, yet many companies still struggle to get value from their AI projects. Much of this comes down to the infrastructure that supports AI, as most systems are not built to run inference at the speed or scale real applications need. Industry studies show many projects miss their…

-

Alibaba’s recently launched Qwen AI app has demonstrated remarkable market traction, accumulating 10 million downloads in the seven days since its public beta release – a velocity that exceeds the early adoption rates of ChatGPT, Sora, and DeepSeek. The application’s rapid uptake reflects a shift in how technology giants are approaching AI commercialisation. While international…

-

Large language models (LLMs) have astounded the world with their capabilities, yet they remain plagued by unpredictability and hallucinations – confidently outputting incorrect information. In high-stakes domains like finance, medicine or autonomous systems, such unreliability is unacceptable. Enter Lean4, an open-source programming language and interactive theorem prover becoming a key tool to inject rigor and…

-

With its WorldGen system, Meta is shifting the use of generative AI for 3D worlds from creating static imagery to fully interactive assets. The main bottleneck in creating immersive spatial computing experiences – whether for consumer gaming, industrial digital twins, or employee training simulations – has long been the labour-intensive nature of 3D modelling. The…

-

OpenAI has sent out emails notifying API customers that its chatgpt-4o-latest model will be retired from the developer platform in mid-February 2026. Access to the model is scheduled to end on February 16, 2026, creating a roughly three-month transition period for applications still built on GPT-4o. Sources familiar with the matter emphasized that this…

-

Salesforce launched a suite of monitoring tools on Thursday designed to solve what has become one of the thorniest problems in corporate artificial intelligence: Once companies deploy AI agents to handle real customer interactions, they often have no idea how those agents are making decisions. The new capabilities, built into Salesforce's Agentforce 360 Platform, give…

-

Researchers at Google have developed a new AI paradigm aimed at solving one of the biggest limitations in today’s large language models: their inability to learn or update their knowledge after training. The paradigm, called Nested Learning, reframes a model and its training not as a single process, but as a system of nested, multi-level…

-

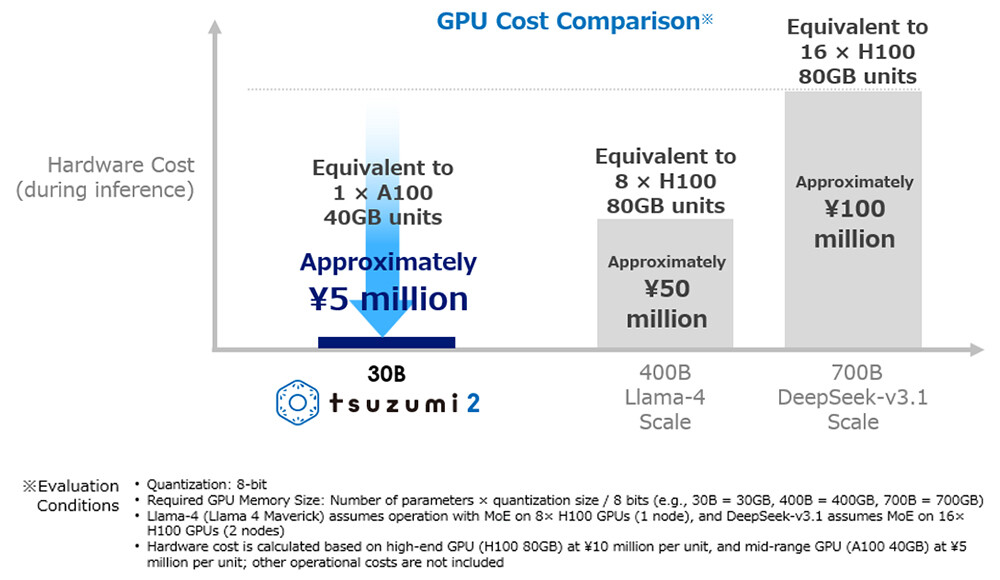

Enterprise AI deployment faces a fundamental tension: organisations need sophisticated language models but baulk at the infrastructure costs and energy consumption of frontier systems. NTT’s recent launch of tsuzumi 2, a lightweight large language model (LLM) running on a single GPU, demonstrates how businesses are resolving this constraint – with early deployments showing performance matching…