-

Researchers at Google have developed a new AI paradigm aimed at solving one of the biggest limitations in today’s large language models: their inability to learn or update their knowledge after training. The paradigm, called Nested Learning, reframes a model and its training not as a single process, but as a system of nested, multi-level…

-

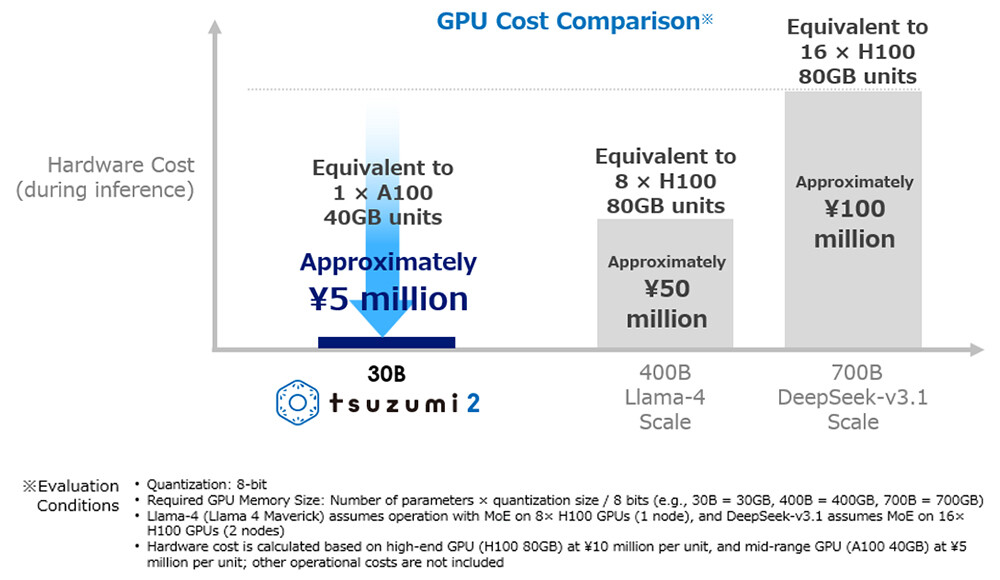

Enterprise AI deployment faces a fundamental tension: organisations need sophisticated language models but baulk at the infrastructure costs and energy consumption of frontier systems. NTT’s recent launch of tsuzumi 2, a lightweight large language model (LLM) running on a single GPU, demonstrates how businesses are resolving this constraint – with early deployments showing performance matching…

-

Many organisations are trying to update their infrastructure to improve efficiency and manage rising costs. But the path is rarely simple. Hybrid setups, legacy systems, and new demands from AI in the enterprise often create trade-offs for IT teams. Recent moves by Microsoft and several storage and data-platform vendors highlight how enterprises are trying to…

-

Choosing the right thermal binoculars is essential for security professionals and outdoor specialists who need reliable long-range detection. Many users who previously relied on the market’s best night vision binoculars now seek advanced thermal imaging for superior clarity, extended range, and weather-independent performance. In 2026, ATN continues to lead the market with cutting-edge thermal binoculars…

-

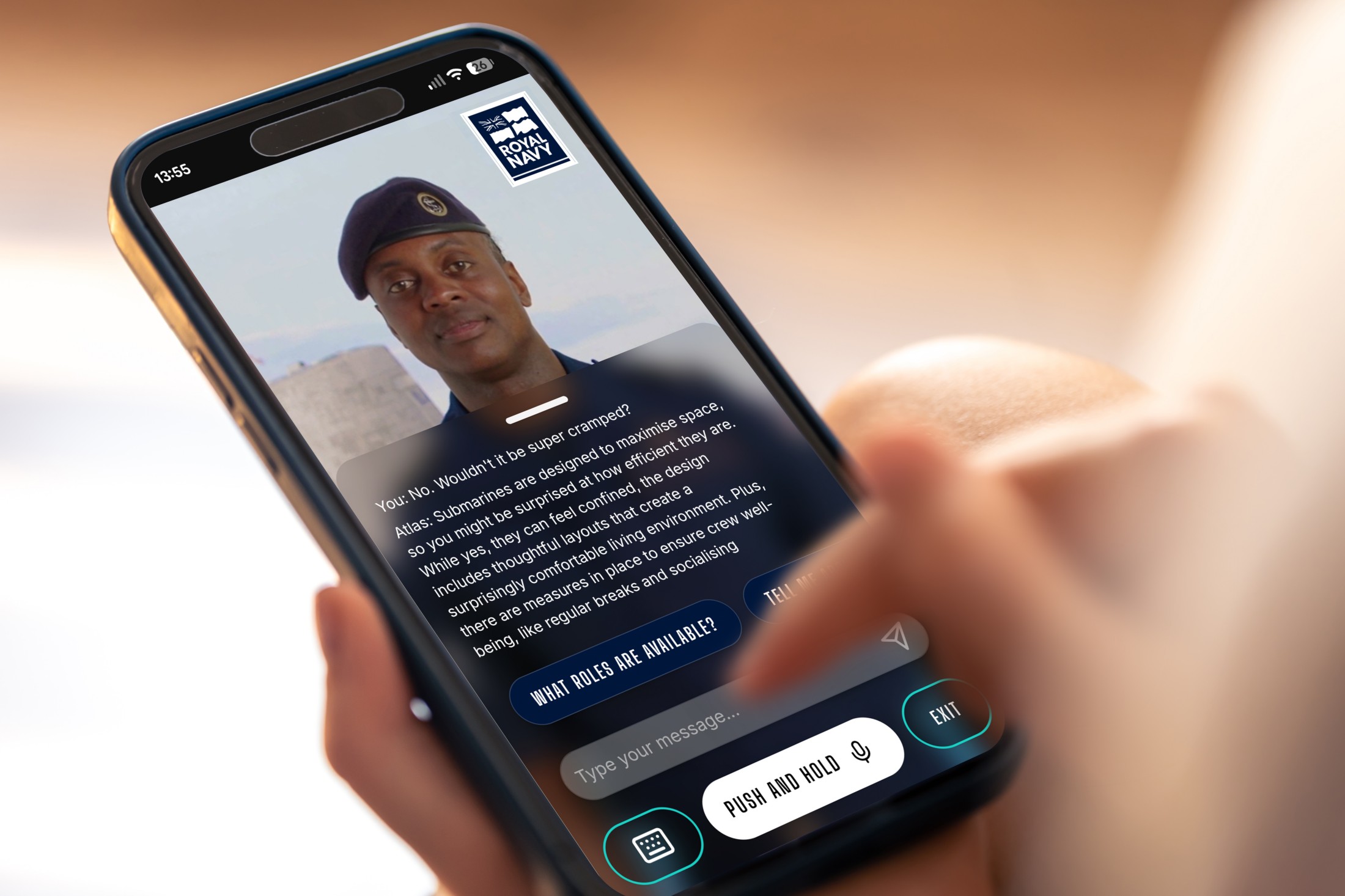

The Royal Navy is handing the first line of its recruitment operations to a real-time AI avatar called Atlas. Atlas is powered by a large language model and has been deployed to field questions from prospective submariners. The deployment shows how AI can support a shift from slow text-based triage to fast and immersive automated…

-

OpenAI has introduced group chats inside ChatGPT, giving people a way to bring up to 20 others into a shared conversation with the chatbot. The feature is now available to all logged-in users after a short pilot earlier this month, and it shifts ChatGPT from a mostly one-on-one tool to something that supports small-group collaboration.…

-

Elon Musk's frontier generative AI startup xAI formally opened developer access to its Grok 4.1 Fast models last night and introduced a new Agent Tools API—but the technical milestones were immediately subverted by a wave of public ridicule about Grok's responses on the social network X over the last few days praising its creator Musk…

-

Infographics rendered without a single spelling error. Complex diagrams one-shotted from paragraph prompts. Logos restored from fragments. And visual outputs so sharp with so much text density and accuracy, one developer simply called it “absolutely bonkers.” Google DeepMind’s newly released Nano Banana Pro—officially Gemini 3 Pro Image—has drawn astonishment from both the developer community and…

-

ScaleOps has expanded its cloud resource management platform with a new product aimed at enterprises operating self-hosted large language models (LLMs) and GPU-based AI applications. The AI Infra Product, announced today, extends the company’s existing automation capabilities to address a growing need for efficient GPU utilization, predictable performance, and reduced operational burden in large-scale AI…

-

Researchers at Meta, the University of Chicago, and UC Berkeley have developed a new framework that addresses the high costs, infrastructure complexity, and unreliable feedback associated with using reinforcement learning (RL) to train large language model (LLM) agents. The framework, DreamGym, simulates an RL environment to train agents for complex applications. As it progresses through…