-

Blaxel, a startup building cloud infrastructure specifically designed for artificial intelligence agents, has raised $7.3 million in seed funding led by First Round Capital, the company announced Tuesday. The…

-

Cloud giant Amazon Web Services (AWS) believes AI agents will change how we all work and interact with information, and that enterprises need a platform that allows them to…

-

Instead of keeping its new MedGemma AI models locked behind expensive APIs, Google will hand these powerful tools to healthcare developers. The new arrivals, MedGemma 27B Multimodal and MedSigLIP, are part of Google's growing collection of open-source healthcare AI models. What makes these special isn't just their technical prowess, but the fact…

-

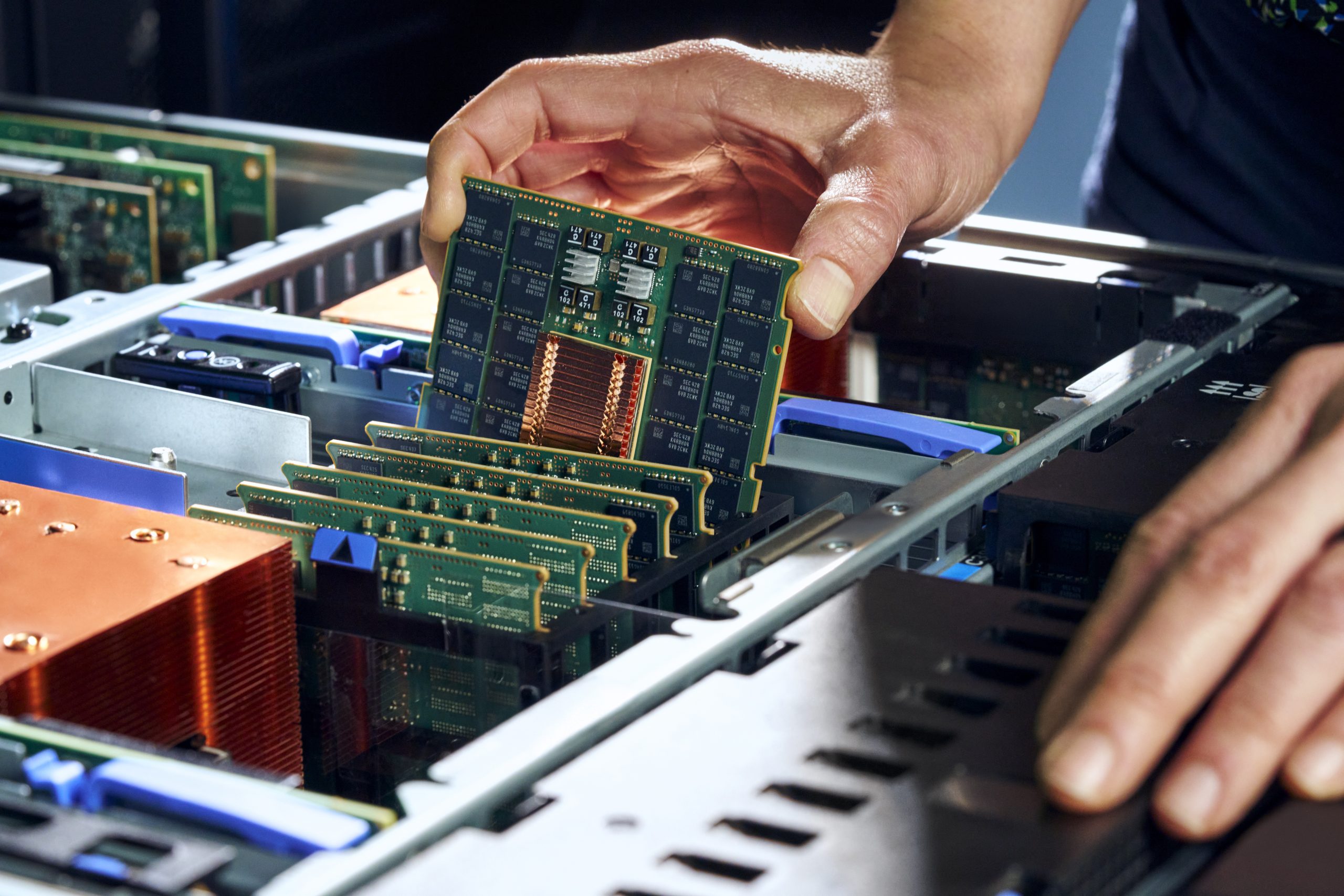

IBM’s Power11 enterprise servers address a persistent challenge in enterprise computing: how to deploy AI workloads without compromising the rock-solid reliability that mission-critical applications demand. Announced on July 8, 2025, the Power11 reflects IBM’s bet that enterprises will prioritise integrated solutions over the current patchwork of specialised AI hardware and traditional servers that many organisations…

-

Despite its traditionally risk-averse nature, the insurance industry is being fundamentally reshaped by AI. The technology has already become vital to insurers, touching everything from complex risk calculations to the way they talk to their customers. However, while nearly eight out of ten companies are dipping their toes in the AI water, a similar…

-

By Eric Elsen, Forte Group. On January 7, 2025, the US Food and Drug Administration (FDA) released draft guidance titled "Artificial Intelligence and Machine Learning in Software as a Medical Device". The document outlines expectations for pre-market applications and lifecycle management of AI-enabled medical software. While the document may have flown under many readers' radar,…

-

Nearly every online business now touches artificial intelligence at some point. Research from 2025 shows 78% of companies worldwide use AI for at least one business area. Smaller businesses report higher usage, with 89% saying they use AI each day. Over 280 million businesses worldwide now run at least one AI tool, and many use…

-

The Pentagon has opened the military AI floodgates and handed out contracts worth up to $800 million to four of the biggest names: Google, OpenAI, Anthropic, and Elon Musk’s xAI. Each company gets a shot at $200 million worth of work. Dr Doug Matty, Chief Digital and AI Officer, said: “The adoption of AI is…

-

A new study by researchers at Google DeepMind and University College London examines how large language models (LLMs) form, maintain and lose confidence in their answers. The findings reveal…