Category: AI

-

For much of 2025, the frontier of open-weight language models has been defined not in Silicon Valley or New York City, but in Beijing and Hangzhou. Chinese research labs including Alibaba's Qwen, DeepSeek, Moonshot and Baidu have rapidly set the pace in developing large-scale, open Mixture-of-Experts (MoE) models — often with permissive licenses and leading…

-

North American enterprises are now actively deploying agentic AI systems intended to reason, adapt, and act with complete autonomy. Data from Digitate’s three-year global programme indicates that, while adoption is universal, regional maturity paths are diverging. North American firms are scaling toward full autonomy, whereas their European counterparts are prioritising governance frameworks…

-

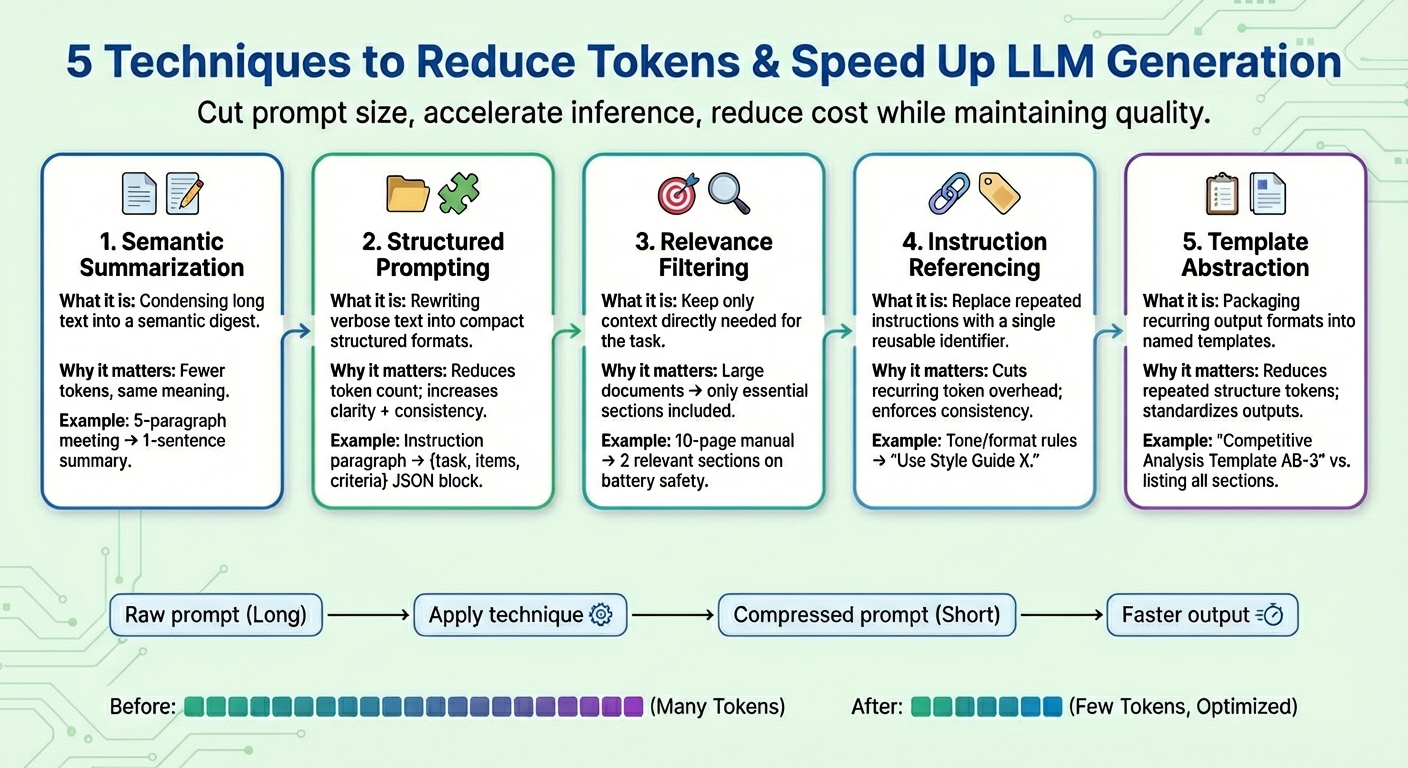

In this article, you will learn five practical prompt compression techniques that reduce tokens and speed up large language model (LLM) generation without sacrificing task quality. Topics we will cover include:

- What semantic summarization is and when to use it
- How structured prompting, relevance filtering, and instruction referencing cut token counts
- Where template abstraction fits…

-

    import dataclasses

    import datasets
    import torch
    import torch.nn as nn
    import tqdm


    @dataclasses.dataclass
    class BertConfig:
        """Configuration for BERT model."""
        vocab_size: int = 30522
        num_layers: int = 12
        hidden_size: int = 768
        num_heads: int = 12
        dropout_prob: float = 0.1
        pad_id: int = 0
        max_seq_len: int = 512
        num_types: int = 2

…

-

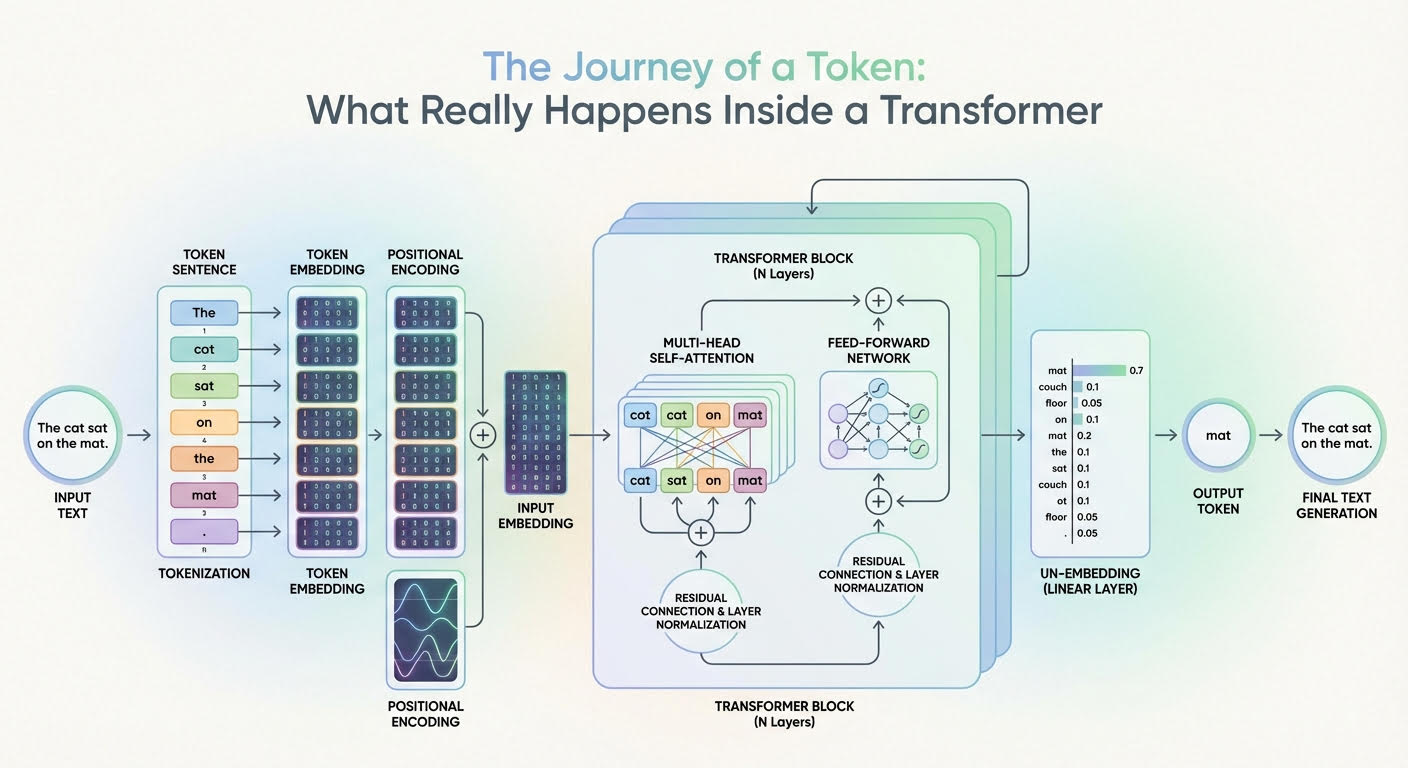

In this article, you will learn how a transformer converts input tokens into context-aware representations and, ultimately, next-token probabilities. Topics we will cover include:

- How tokenization, embeddings, and positional information prepare inputs
- What multi-headed attention and feed-forward networks contribute inside each layer
- How the final projection and softmax produce next-token probabilities

Let’s get our journey…
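To make the last step in that teaser concrete, here is a minimal sketch of how a final projection followed by softmax can turn one hidden vector into next-token probabilities. It is not taken from the article: the three-word vocabulary, the hidden vector, the weight matrix, and the helper names `project` and `softmax` are all hypothetical toy values chosen for illustration.

```python
import math


def project(hidden, weights):
    """Final projection: one logit per vocabulary entry (dot product with each weight row)."""
    return [sum(h * w for h, w in zip(hidden, row)) for row in weights]


def softmax(logits):
    """Convert raw logits into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max so exp() cannot overflow
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical toy setup: a 2-dimensional hidden state and a 3-token vocabulary.
vocab = ["cat", "dog", "fish"]
hidden = [1.0, 0.5]
weights = [
    [2.0, 0.0],  # row for "cat"
    [0.0, 2.0],  # row for "dog"
    [1.0, 1.0],  # row for "fish"
]

logits = project(hidden, weights)
probs = softmax(logits)

# Greedy decoding: pick the token with the highest probability.
next_token = vocab[probs.index(max(probs))]
print(next_token, [round(p, 3) for p in probs])
```

A real model would use a hidden size in the hundreds or thousands and a vocabulary of tens of thousands of tokens, and would usually sample from `probs` rather than always taking the maximum.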

-

Chinese artificial intelligence startup DeepSeek released two powerful new AI models on Sunday that the company claims match or exceed the capabilities of OpenAI's GPT-5 and Google's Gemini-3.0-Pro — a development that could reshape the competitive landscape between American tech giants and their Chinese challengers. The Hangzhou-based company launched DeepSeek-V3.2, designed as an everyday reasoning…

-

When Liquid AI, a startup founded by MIT computer scientists back in 2023, introduced its Liquid Foundation Models series 2 (LFM2) in July 2025, the pitch was straightforward: deliver the fastest on-device foundation models on the market using the new "liquid" architecture, with training and inference efficiency that made small models a serious alternative to…

-

When JPMorgan Asset Management reported that AI spending accounted for two-thirds of US GDP growth in the first half of 2025, it wasn’t just a statistic – it was a signal. The conversation reached a turning point recently when OpenAI CEO Sam Altman, Amazon’s Jeff Bezos, and Goldman Sachs CEO David Solomon each acknowledged market…

-

With some needed infrastructure now being developed for agentic commerce, enterprises will want to figure out how to participate in this new form of buying and selling. But it remains a fragmented Wild West with competing payment protocols, and it's unclear what enterprises need to do to prepare. More cloud providers and AI model companies…

-

When JPMorgan Asset Management reported that AI spending accounted for two-thirds of US GDP growth in the first half of 2025, it wasn’t just a statistic – it was a signal. Enterprise leaders are making trillion-dollar bets on AI transformation, even as market observers debate whether we might be witnessing bubble-era exuberance. The conversation reached…